Frequently asked questions (FAQ)

How do you guarantee that data on DBnomics is correct?

In short, there is no formal guarantee, but...

Fetcher developers use a validation script in order to detect some errors automatically.

Of course, a program can't verify that the floating point values themselves are correct. That requires manual checks against the original source data on the provider's website. The DBnomics core team includes economists who manually verify the data produced by a fetcher once its developers have finished writing it.
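
To illustrate the split between automatic and manual checks, here is a minimal sketch of the kind of structural test a program can run. It is not the actual DBnomics validation script: the series layout (`period` and `value` lists, `"NA"` for missing observations), the accepted period formats, and the function name `find_structural_errors` are assumptions made for this example.

```python
import re

# Hypothetical period formats: annual (2020), quarterly (2020-Q1), monthly (2020-01).
PERIOD_RE = re.compile(r"^\d{4}(-Q[1-4]|-(0[1-9]|1[0-2]))?$")


def find_structural_errors(series: dict) -> list[str]:
    """Return the structural problems found in a series (assumed layout)."""
    errors = []
    periods = series.get("period", [])
    values = series.get("value", [])
    if len(periods) != len(values):
        errors.append("period and value lists have different lengths")
    for period in periods:
        if not PERIOD_RE.match(str(period)):
            errors.append(f"malformed period: {period!r}")
    if len(set(periods)) != len(periods):
        errors.append("duplicate periods")
    for value in values:
        # bool is a subclass of int, so reject it explicitly.
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if not is_number and value != "NA":
            errors.append(f"value is neither a number nor 'NA': {value!r}")
    return errors
```

A series containing `1.5` where the provider published `15` passes every check above, which is why the manual comparison against the source remains necessary.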

Finally, as with many free software projects, the quality of DBnomics improves each time its users contribute, and reporting problems with data is really helpful.

See also: acceptance process for a fetcher.

I like DBnomics, how can I contribute?

Well, glad you like it :)

You can contribute in several ways:

- by reporting problems with data

- by participating in discussions on the community forum and becoming an active community member

- by writing libraries to access data from programming languages

- by writing new fetchers to support new providers or datasets

- financially, to help the core team spend more time on maintenance and on developing new features: contact us

- for institutions, by joining the steering committee of DBnomics: contact us

How can I contact the DBnomics core team?

- by email: contact@nomics.world

- on the community forum

- on Twitter: @DBnomics

How do I download data from LibreOffice Calc?

Please read: download data.

I've seen a problem with data on DBnomics, what should I do?

Please read: report problems with data.

When is data updated?

Updating data as soon as the provider publishes it is a major concern. Here is how it works.

DBnomics data, distributed by its web API, is updated daily (most of the time) by fetchers.

There can be two kinds of delay:

- the delay between the publication of new data on provider side, and the next execution of the corresponding fetcher,

- and the delay due to a failure of the fetcher, requiring manual intervention.

The first delay can be reduced when providers publish a log of data updates (e.g. IMF, INSEE, Eurostat) that is small enough to be checked more often (e.g. hourly). In this case, the download is triggered only if needed, and applies only to data that was updated since the last fetcher execution. We call this the incremental mode.
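
The incremental mode can be sketched as follows. This is a simplified illustration, not the code of an actual fetcher: the update log format (a list of dataset codes with their last update time) and the names `datasets_to_download` and `last_run` are assumptions.

```python
from datetime import datetime, timezone


def datasets_to_download(update_log: list[dict], last_run: datetime) -> list[str]:
    """Return the codes of the datasets updated by the provider since last_run.

    update_log is a hypothetical provider log: each entry has a
    "dataset_code" and an ISO 8601 "updated_at" timestamp.
    """
    codes = []
    for entry in update_log:
        updated_at = datetime.fromisoformat(entry["updated_at"])
        if updated_at > last_run:
            codes.append(entry["dataset_code"])
    return codes


# Example log, small enough to be polled often (e.g. hourly).
update_log = [
    {"dataset_code": "GDP", "updated_at": "2024-03-01T09:00:00+00:00"},
    {"dataset_code": "CPI", "updated_at": "2024-03-02T14:30:00+00:00"},
]
last_run = datetime(2024, 3, 2, 0, 0, tzinfo=timezone.utc)

# Only CPI changed after the last run, so only CPI needs to be downloaded.
print(datasets_to_download(update_log, last_run))
```

When the log shows no change since the last run, the returned list is empty and no download is triggered.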

Where do I find the source code of the fetcher of a specific provider?

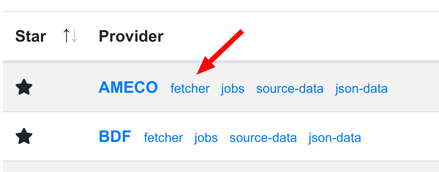

Use the dashboard that lists all the providers, each having a link to the repository of the source code of its corresponding fetcher.

Can I have my private data on DBnomics?

DBnomics is a freely accessible project which does not require its users to authenticate themselves. Therefore, if private data were added, publishing it would violate that data's licence or terms of use.

Please contact us if you're interested in using private data with DBnomics; we'd like to know more about your needs.

Can I install a private instance of DBnomics?

It is technically possible to manually install another instance of DBnomics. Some institutions may want to use such an instance to work on private data.

However, the different instances would not be federated, as DBnomics has not been designed with this need in mind.

Some technical background: DBnomics is currently deployed manually on servers, i.e. without using containers or a container orchestration platform such as Kubernetes. Some work has been done to create containers for the main services of the architecture, but the project still needs to be deployed manually.

Why do fetchers break after a while?

If you look at the dashboard, you will see that many fetchers are in error status with red icons.

Fetchers download and convert data from providers, but the provider website, web API, or data can evolve in an unpredictable way.

A fetcher is only as stable as its provider, which means a stable infrastructure, stable API URLs, stable responses, a stable data model, etc.

Fetcher maintenance takes up a large part of the time spent on DBnomics.

Which data sources are easier to process with fetchers?

Data distributed in manually formatted Excel files is difficult for fetchers to process, mainly because the data is not produced systematically.

For example, cells with a colored background that adds semantics to the data are quite annoying to process, as are merged cells, data tables shifted below a header, and footers with notes.

On the other hand, data distributed in raw files like XML, JSON or CSV is much easier to work with, because there are almost no special cases to handle.
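
The difference can be illustrated with a small sketch. The sample rows, the header name `period`, and the helper `extract_table` are made up for this example; real Excel files usually need more special cases than shown here.

```python
import csv
import io

# Rows as they might come out of a manually formatted sheet:
# a title, a blank line, the real header, the data, then a footer note.
sheet_rows = [
    ["Quarterly GDP (provisional)", ""],
    ["", ""],
    ["period", "value"],
    ["2023-Q1", "101.2"],
    ["2023-Q2", "102.0"],
    ["Note: figures are seasonally adjusted", ""],
]


def extract_table(rows: list[list[str]]) -> list[dict]:
    """Find the header row, then keep only the rows that look like observations."""
    header_index = next(i for i, row in enumerate(rows) if row[0] == "period")
    header = rows[header_index]
    table = []
    for row in rows[header_index + 1:]:
        if not row[1]:  # footer notes have no value cell
            break
        table.append(dict(zip(header, row)))
    return table


# The same data as raw CSV needs no special cases at all.
raw_csv = "period,value\n2023-Q1,101.2\n2023-Q2,102.0\n"
csv_table = list(csv.DictReader(io.StringIO(raw_csv)))

print(extract_table(sheet_rows) == csv_table)
```

Both paths produce the same observations, but the first one relies on guesses (where the header is, what a footer looks like) that break as soon as the file layout changes.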

Another aspect is the transport layer: it is easier to download a big ZIP file containing raw data files and process them locally (like Eurostat does), than to have to scrape data from a website in HTML, or query it from an API that throttles HTTP requests.
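
Processing a bulk archive locally can be sketched as below. To keep the example self-contained, the archive is built in memory instead of being downloaded; the file names and contents are invented, and `read_csv_files` is a hypothetical helper.

```python
import csv
import io
import zipfile


def read_csv_files(archive_bytes: bytes) -> dict[str, list[dict]]:
    """Parse every CSV file of a ZIP archive, without any further HTTP request."""
    tables = {}
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        for name in archive.namelist():
            if name.endswith(".csv"):
                text = archive.read(name).decode("utf-8")
                tables[name] = list(csv.DictReader(io.StringIO(text)))
    return tables


# Stand-in for a bulk download: a single request would return these bytes.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("gdp.csv", "period,value\n2022,100.0\n2023,101.5\n")
    archive.writestr("README.txt", "Bulk export")

tables = read_csv_files(buffer.getvalue())
print(sorted(tables))
```

With one archive, the number of HTTP requests does not grow with the number of series, so request throttling is much less of a concern.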

Why does DBnomics use Git to store data?

DBnomics fetchers store downloaded and converted time series as files and directories in Git repositories.

This design is quite uncommon, and this question has been raised many times. Indeed, DBnomics does not use a regular database to store time series.

The main reasons that led the developers to choose Git are:

- keep track of every data revision: this works out of the box with Git by making use of commits

- support data models from source providers: DBnomics stores provider data as-is (e.g. Excel, XML, CSV, etc.)

- DBnomics data model is document oriented, and this works well with files and directories

- do not depend on an infrastructure: fetchers should be easy to develop and contribute, and writing scripts that output files, without needing to run a local database, seems the simplest solution

- allow users to consume data by cloning the repository directly, and pulling changes afterwards

- Git stores data using a copy on write strategy: in short, if only a few bytes change in a commit, only the difference will be stored
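
The idea of scripts that simply output files can be sketched as follows. The directory layout and file names (`dataset.json`, one JSON file per series) are assumptions for illustration and may differ from what DBnomics fetchers actually write; `write_dataset` is a hypothetical helper.

```python
import json
import tempfile
from pathlib import Path


def write_dataset(target_dir: Path, dataset: dict, series_list: list[dict]) -> list[Path]:
    """Write a dataset as plain files: one directory, one document per file."""
    dataset_dir = target_dir / dataset["code"]
    dataset_dir.mkdir(parents=True, exist_ok=True)
    written = []
    metadata_path = dataset_dir / "dataset.json"
    metadata_path.write_text(json.dumps(dataset, indent=2, sort_keys=True))
    written.append(metadata_path)
    for series in series_list:
        series_path = dataset_dir / f"{series['code']}.json"
        # Stable formatting keeps the diff between two revisions small.
        series_path.write_text(json.dumps(series, indent=2, sort_keys=True))
        written.append(series_path)
    return written


with tempfile.TemporaryDirectory() as tmp:
    paths = write_dataset(
        Path(tmp),
        {"code": "GDP", "name": "Gross domestic product"},
        [{"code": "A.FR", "period": ["2022", "2023"], "value": [100.0, 101.5]}],
    )
    names = sorted(p.name for p in paths)
    print(names)
```

Running such a script needs no database server, and committing the target directory after each run would record one revision per fetcher execution.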

Git adoption comes with drawbacks:

- performance degrades when Git repositories contain either too many files or big files: committing, pushing and pulling become slow

- accessing past revisions without checking out files requires loading the whole blob (i.e. file) in memory

- using Git Large File Storage (LFS) does not store data using a copy on write strategy
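
For reference, reading a past revision without a checkout can be done with standard Git commands, sketched here through Python's `subprocess` module. The helper names and the example paths are hypothetical, and running the commands assumes a `git` executable and an existing local clone. Note that `git show` outputs the entire file content, which is the memory cost mentioned above.

```python
import subprocess


def revisions_command(path: str) -> list[str]:
    """Command listing the hashes of the commits that touched a file, newest first."""
    return ["git", "log", "--format=%H", "--", path]


def show_command(revision: str, path: str) -> list[str]:
    """Command printing the full content of a file at a given revision.

    The path is interpreted relative to the root of the repository.
    """
    return ["git", "show", f"{revision}:{path}"]


def run_git(repo_dir: str, command: list[str]) -> bytes:
    """Run a Git command in repo_dir and return its whole output in memory."""
    result = subprocess.run(command, cwd=repo_dir, capture_output=True, check=True)
    return result.stdout


# Example (requires a real repository, so it is left commented out):
# commits = run_git("path/to/clone", revisions_command("GDP/A.FR.json")).split()
# oldest = commits[-1].decode()
# content = run_git("path/to/clone", show_command(oldest, "GDP/A.FR.json"))
```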

In the future, DBnomics could use a more efficient storage backend, which remains to be chosen after benchmarking several options. This solution would need, at least, to support data revisions, support querying data "as of" a timestamp or "as of" a revision, be distributed for better availability and performance, allow storing unstructured or structured data, store data using copy on write, and allow cloning the database locally and pulling changes.
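
A query "as of" a timestamp returns the data that was current at a given moment. The following sketch shows the idea on an in-memory list of revisions; it says nothing about which backend DBnomics might choose, and the `RevisionStore` class is purely illustrative.

```python
import bisect
from datetime import datetime
from typing import Optional


class RevisionStore:
    """Keep every revision of a document, ordered by commit time."""

    def __init__(self) -> None:
        self._timestamps: list[datetime] = []
        self._documents: list[dict] = []

    def commit(self, timestamp: datetime, document: dict) -> None:
        """Append a revision; timestamps must be strictly increasing."""
        if self._timestamps and timestamp <= self._timestamps[-1]:
            raise ValueError("revisions must be committed in chronological order")
        self._timestamps.append(timestamp)
        self._documents.append(document)

    def as_of(self, timestamp: datetime) -> Optional[dict]:
        """Return the latest revision committed at or before timestamp."""
        index = bisect.bisect_right(self._timestamps, timestamp)
        return self._documents[index - 1] if index else None


store = RevisionStore()
store.commit(datetime(2024, 1, 10), {"2023-Q4": 1.2})
store.commit(datetime(2024, 2, 10), {"2023-Q4": 1.4})  # revised figure

# At the end of January, only the first revision existed.
print(store.as_of(datetime(2024, 1, 31)))
```

Querying before the first commit returns `None`, and querying after the last one returns the revised figure.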

The DBnomics core team keeps an eye on different technologies related to data lakes, cloud-native databases, and Git-like databases.